Ever felt like the SEO landscape is a never‑ending maze, and every time you think you’ve found the exit, a new algorithm update pops up like a surprise checkpoint?

You're not alone. Many digital marketing managers, content creators, and e‑commerce owners tell us they spend hours tweaking meta tags, building backlinks, and still wonder why traffic crawls instead of surges.

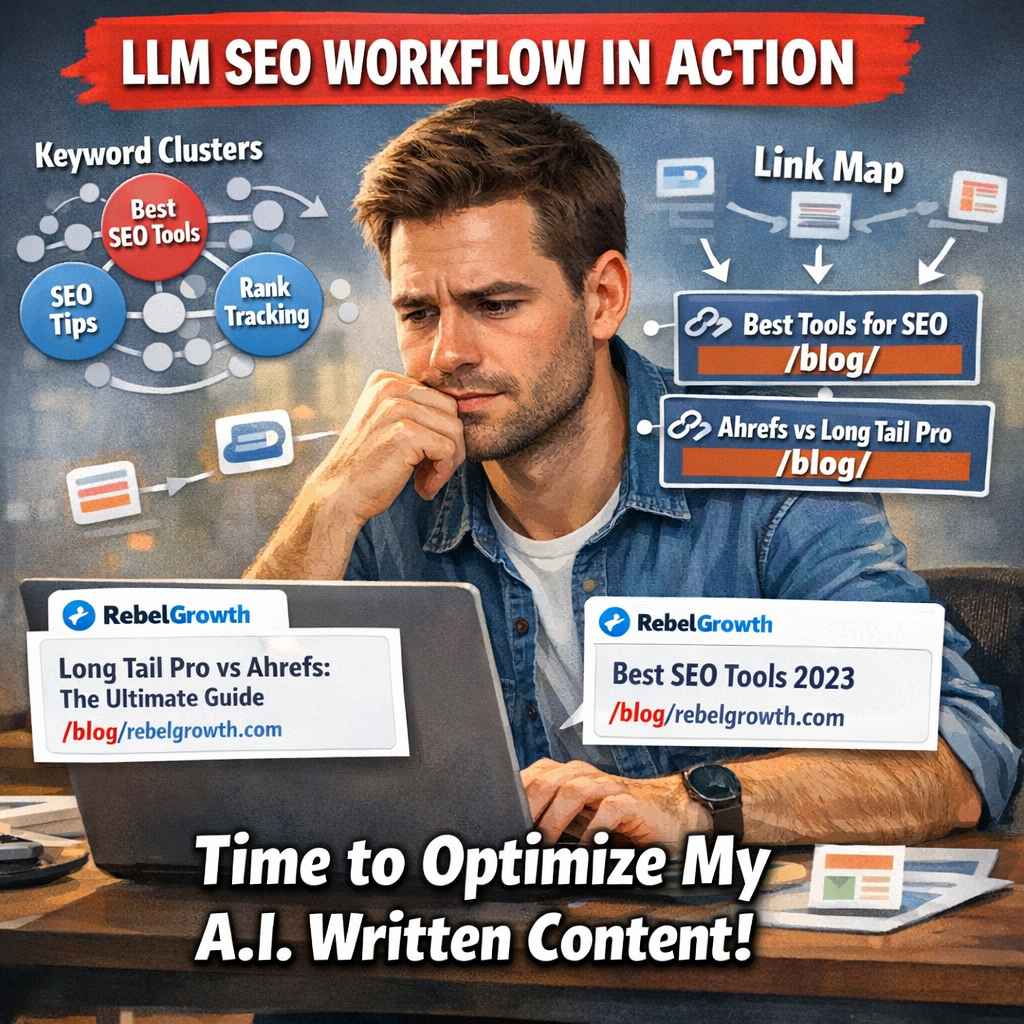

Enter LLM SEO – the practice of leveraging large language models to automate research, draft content, and even suggest link‑building tactics. It’s not magic; it’s a smarter way to let AI handle the grunt work while you focus on strategy.

So, how does that actually feel in day‑to‑day work? Imagine typing a brief about a new product line and watching an AI spin out a fully optimized article, complete with keyword clusters and internal linking suggestions, in minutes.

What’s exciting is that teams using LLM SEO report cutting content production time by up to 70%, freeing up bandwidth for creative experiments you’ve been putting on the back burner.

In our experience, teams that pair LLM‑driven drafting with a reliable backlink network see more consistent ranking improvements, especially when they’re targeting niche queries that traditional tools overlook.

But the real question is: are you ready to let a language model become your SEO co‑pilot, or will you keep wrestling with spreadsheets and guesswork?

Stick around, because we’ll walk through the core components of LLM SEO, share practical setup tips, and show you how to integrate it with the kind of automated content engine Rebelgrowth builds for businesses just like yours.

By the end of this guide, you’ll have a clear roadmap to start experimenting today – no PhD in AI required.

And if you’re wondering whether this is just a hype wave, think about how quickly AI‑generated drafts have moved from novelty to newsroom staple. The same momentum is now reshaping search, and the early adopters are already seeing a competitive edge.

TL;DR

LLM SEO lets you generate fully optimized articles, keyword clusters, and internal linking suggestions in minutes, slashing content creation time by up to 70%.

Combine that speed with Rebelgrowth’s automated backlink network, and you get a scalable, hands‑free strategy that boosts rankings and frees you to focus on creative experiments.

Understanding LLM SEO Basics

Ever sat down to plan a month’s worth of blog posts and felt the dread of keyword research, meta‑tags, and link maps? Yeah, we’ve all been there. That moment of overwhelm is exactly why LLM SEO exists – it’s the shortcut that lets a language model handle the grunt work while you keep the creative spark alive.

At its core, LLM SEO is simply using a large language model – think GPT‑4 or Claude – to generate SEO‑focused content. You feed the model a brief, a seed keyword, maybe a competitor URL, and it spits out a draft that already respects title length, keyword density, and even suggests internal links. No more copy‑pasting spreadsheets of keyword ideas.

How does it actually happen? First, you craft a prompt that tells the model what you need: “Write a 1,200‑word article about sustainable fashion, include a keyword cluster around ‘eco‑friendly fabrics’, and suggest three internal links.” The model then draws on patterns it learned during training to surface related terms and returns a near‑final draft, ready for a quick human review.

Three pillars make up a solid LLM SEO workflow: prompt engineering, keyword clustering, and internal‑link automation. Prompt engineering is the art of asking the right question; the clearer you are, the tighter the output. Keyword clustering groups related terms so the article ranks for a whole semantic field, not just one phrase. And internal‑link automation hands you a map of where that new article should sit within your site architecture.

Want to see a real‑world example of internal‑link automation? Check out how AI is revolutionizing internal linking automation for SEO – it walks through the exact steps we just mentioned, with screenshots that make the process feel almost plug‑and‑play.

The biggest payoff is speed. Teams report cutting content‑creation time by up to 70 %. That means you can publish more articles in a week than you used to in a month, and you free up mental bandwidth for strategy, testing headlines, or finally tweaking that product page you’ve been postponing.

Of course, speed alone isn’t enough. Quality still matters, especially for Google’s E‑E‑A‑T signals. That’s why many of our clients pair LLM‑generated drafts with a quick human review and then hand the final version over to Rebelgrowth’s automated backlink engine, which builds high‑authority links in the background.

Here’s a quick visual recap – we’ve embedded a short video that walks through the entire LLM SEO pipeline, from prompt to publish.

While the AI handles the heavy lifting, you might wonder how to monetize that traffic. That’s where platforms like Affili8r come into play – they offer AI‑driven affiliate tools that can plug into the content you just created, turning clicks into commissions without a separate manual setup.

Another handy companion is YTSummarizer, a service that can take long‑form AI drafts and produce crisp, shareable summaries for social media or email newsletters, keeping your audience engaged across channels.

Key Benefits of Using LLMs for SEO

Speed without sacrificing quality

Ever felt the pressure of churning out fresh blog posts week after week? You’re not alone. With LLM SEO, you can generate a draft in minutes, not days, and still keep that human‑like nuance that search engines love.

In practice, that means you spend less time wrestling with keyword lists and more time polishing the message for your audience—whether you’re a digital marketing manager juggling a handful of product lines or a solo blogger trying to stay consistent.

Semantic depth that resonates

LLMs are good at modeling context. Instead of sprinkling exact‑match keywords, the model weaves related entities, synonyms, and user intent into a single, coherent narrative. That’s the kind of semantic richness Google’s own language models, such as BERT and MUM, are designed to understand.

Picture this: you run an e‑commerce store selling eco‑friendly kitchenware. An LLM‑crafted article might naturally include phrases like “sustainable cooking tools,” “biodegradable utensils,” and “zero‑waste kitchen tips,” all while answering common buyer questions. The result? Higher chances of landing in a featured snippet.

Scalable topic clustering

One of the biggest headaches for SEO specialists is keeping topic clusters tight and interconnected. LLMs can suggest pillar pages, supporting posts, and internal links in one go. You end up with a web of content that tells a complete story, instead of isolated pieces.

That internal linking logic is something we’ve seen work wonders for small‑to‑mid‑size companies that need authority fast without hiring a full‑time content team.

Data‑driven iteration

Because the content is generated from prompts, you can A/B test headlines, meta descriptions, or even entire article structures in a matter of hours. The feedback loop becomes almost real‑time, letting you double‑down on what actually moves the needle.

And if you’re tracking metrics like click‑through rate or time‑on‑page, you’ll quickly spot which LLM‑generated variations outperform the rest.
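Want something firmer than eyeballing two CTRs? A two-proportion z-test is one common way to check whether a headline variant's lift is likely to be real rather than noise. Here's a minimal Python sketch, assuming you already have clicks and impressions for each variant (for example, from Search Console); the `ctr_z_test` helper and the numbers are illustrative, not part of any particular tool.

```python
import math


def ctr_z_test(clicks_a: int, impressions_a: int,
               clicks_b: int, impressions_b: int) -> tuple[float, float]:
    """Two-proportion z-test comparing variant B's CTR against variant A's.

    Returns (z_score, two_sided_p_value).
    """
    p_a = clicks_a / impressions_a
    p_b = clicks_b / impressions_b
    # Pooled CTR under the null hypothesis that both variants perform the same
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, written in terms of erf
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical numbers: original headline (A) vs. an LLM-generated variant (B)
z, p = ctr_z_test(clicks_a=120, impressions_a=4000, clicks_b=165, impressions_b=4100)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up numbers the variant's lift clears the conventional 0.05 threshold; with small impression counts, treat any result as a hint rather than proof.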

Cost efficiency for growing businesses

Hiring freelance writers or agencies can quickly eat into a marketing budget. An LLM can produce a first draft that only needs a quick human edit, slashing costs while maintaining a consistent brand voice.

For e‑commerce owners, that means more product pages, more blog posts, and ultimately more organic traffic without the overhead of a large editorial team.

So, does this sound like a silver bullet? Not quite. The model is a powerful co‑author, but you still need a human eye to ensure accuracy and brand alignment.

Ready to see LLM SEO in action? Check out this quick walkthrough:

After you watch, try feeding a simple prompt about a product you sell and let the model generate a draft. Then, compare the output to a traditional piece you wrote last month. You’ll probably notice the difference in both speed and semantic coverage.

Bottom line: LLMs give you the speed, depth, and scalability that modern SEO demands—while still keeping a human in the loop to add that final touch of authenticity.

Implementing LLM SEO: Step‑by‑Step Guide

Alright, you’ve seen the speed and depth LLMs can bring. The next question is: how do you actually roll it out without tripping over the basics?

1. Define the SEO goal and the content bucket

Start with a crystal‑clear objective – is it to dominate a niche keyword, boost product‑page conversions, or fill a content gap in your blog? Write that goal on a sticky note, then pick a bucket of topics that feed it. For a small‑to‑mid e‑commerce brand, a bucket might be “remote‑work office ergonomics.”

Once you have the bucket, pull the top five high‑intent questions from your support tickets, forums, or Google Search Console. Those questions become the prompts you feed the LLM.
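If your questions come from Google Search Console, a few lines of Python can do the shortlisting. This sketch assumes you've already exported your query report into `(query, impressions)` pairs; the question-word filter and the `top_questions` helper are our own illustrative assumptions, not a Search Console feature.

```python
# Words that usually open a question-style search query (illustrative list)
QUESTION_WORDS = {"how", "what", "why", "which", "when", "where", "who",
                  "can", "is", "are", "does", "do", "should"}


def is_question(query: str) -> bool:
    """Rough check: does the query start with a question word?"""
    words = query.lower().split()
    return bool(words) and words[0] in QUESTION_WORDS


def top_questions(rows: list[tuple[str, int]], n: int = 5) -> list[str]:
    """Return the n question-style queries with the most impressions."""
    questions = [(query, impressions) for query, impressions in rows if is_question(query)]
    questions.sort(key=lambda row: row[1], reverse=True)  # most impressions first
    return [query for query, _ in questions[:n]]


# Hypothetical export rows: (query, impressions)
rows = [
    ("ergonomic office chair", 5400),
    ("what is the weight limit for an ergonomic chair", 880),
    ("how to adjust lumbar support", 1320),
    ("best standing desk", 2900),
    ("is a kneeling chair good for your back", 610),
]
print(top_questions(rows))
```

A first-word filter is crude (it will miss queries like "ergonomic chair weight limit"), so treat the output as a starting list to review by hand.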

2. Craft a prompt that speaks the model’s language

Think of prompting like ordering a coffee – the more precise, the better the result. Borrow loosely from the journalist’s 5 Ws + H and spell out who the audience is, what the angle is, why it matters, which keywords to cover, how it should sound, and any length constraints.

Example prompt for an ergonomic chair page: “Generate an 800‑word guide for remote workers looking for ergonomic office chairs. Highlight ‘adjustable lumbar support’, ‘budget‑friendly’, and include a FAQ that answers ‘What is the weight limit for an ergonomic chair?’ Use a friendly, expert tone.”
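If you write a lot of these briefs, it can help to capture the checklist as a data structure so every prompt covers the same fields. Below is a minimal Python sketch; the `PromptBrief` fields and the sentence template are assumptions modeled on the example prompt, not a required format.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBrief:
    audience: str            # who the piece is for
    angle: str               # what kind of piece it is
    why: str                 # why it matters to the reader
    keywords: list[str]      # which terms to highlight
    tone: str                # how it should sound
    word_count: int          # length constraint
    faq_questions: list[str] = field(default_factory=list)


def build_prompt(brief: PromptBrief) -> str:
    """Turn a brief into one prompt string, one sentence per checklist item."""
    keywords = ", ".join(f"'{k}'" for k in brief.keywords)
    parts = [
        f"Write a {brief.angle} of about {brief.word_count} words for {brief.audience}.",
        f"It matters because {brief.why}.",
        f"Highlight {keywords}.",
    ]
    if brief.faq_questions:
        questions = "; ".join(f"'{q}'" for q in brief.faq_questions)
        parts.append(f"Include a FAQ that answers {questions}.")
    parts.append(f"Use a {brief.tone} tone.")
    return " ".join(parts)


brief = PromptBrief(
    audience="remote workers looking for ergonomic office chairs",
    angle="guide",
    why="they will sit in this chair for most of the working day",
    keywords=["adjustable lumbar support", "budget-friendly"],
    tone="friendly, expert",
    word_count=800,
    faq_questions=["What is the weight limit for an ergonomic chair?"],
)
print(build_prompt(brief))
```

Keeping briefs as data also makes it easy to store them next to the performance numbers later and see which briefs produced the best pages.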

3. Let the LLM draft, then run a quick sanity check

The model will spit out a draft in minutes. At this stage, skim for factual accuracy, brand voice, and any duplicate content flags. If you spot a vague claim, drop a note into the prompt and ask the model to rewrite that paragraph.

In our experience, a quick 5‑minute review cuts the edit time by 70 % compared to a full‑scale rewrite.

4. Optimize on‑page elements with a prompt library

Instead of manually writing meta titles, use a reusable prompt: “Create three SEO‑friendly title tags under 60 characters for a page about [topic]. Include the keyword [keyword] and a call‑to‑action.” Store these templates in a shared doc so every team member can copy‑paste.

Do the same for meta descriptions, H1s, and FAQ snippets. A consistent library keeps the output uniform across dozens of pages.
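A prompt library can be as simple as a dictionary of templates plus a check on what comes back. In this sketch the template names, the 155-character meta description limit, and the `valid_titles` filter are illustrative assumptions; you'd still paste the rendered prompt into your LLM of choice and run its suggestions through the filter.

```python
# Reusable prompt templates; {topic} and {keyword} are filled in per page.
PROMPT_LIBRARY = {
    "title_tag": (
        "Create three SEO-friendly title tags under 60 characters for a page "
        "about {topic}. Include the keyword {keyword} and a call-to-action."
    ),
    "meta_description": (
        "Write a meta description under 155 characters for a page about "
        "{topic}. Include the keyword {keyword}."
    ),
}


def render(name: str, **fields: str) -> str:
    """Fill a template from the library with page-specific values."""
    return PROMPT_LIBRARY[name].format(**fields)


def valid_titles(candidates: list[str], keyword: str, max_len: int = 60) -> list[str]:
    """Keep model-suggested titles that are under max_len characters and contain the keyword."""
    return [t for t in candidates if len(t) < max_len and keyword.lower() in t.lower()]


print(render("title_tag", topic="ergonomic office chairs", keyword="ergonomic chair"))

# Hypothetical suggestions pasted back from the model
suggestions = [
    "Ergonomic Chair Guide for Remote Workers: Shop Now",
    "The Complete, Exhaustive Buyer's Guide to Choosing an Ergonomic Chair for Your Home Office",
    "Best Standing Desks for Small Spaces",
]
print(valid_titles(suggestions, keyword="ergonomic chair"))
```

Only the first suggestion survives: the second is too long and the third drops the keyword.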

5. Build internal linking clusters automatically

Feed the LLM a list of existing pillar pages and ask it to suggest three contextual internal links for the new draft. It will often surface connections you hadn’t thought of, like linking a chair guide to a blog post about “best standing desks for home offices.”
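Before you hand the model your pillar list, you can shortlist candidates cheaply. The sketch below is not what an LLM does; it's a plain word-overlap heuristic (hypothetical `rank_link_candidates` helper, made-up URLs) that ranks existing pillar pages by how many title words they share with the draft.

```python
import re

# A few filler words to ignore when comparing text (illustrative list)
STOPWORDS = {"a", "an", "and", "the", "for", "of", "to", "in", "on", "with", "your"}


def tokens(text: str) -> set[str]:
    """Lowercase word set with common filler words removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def rank_link_candidates(draft: str, pillars: dict[str, str], k: int = 3) -> list[str]:
    """Return up to k pillar URLs whose titles share the most words with the draft."""
    draft_words = tokens(draft)
    scored = []
    for url, title in pillars.items():
        shared = len(draft_words & tokens(title))
        if shared:
            scored.append((-shared, url))  # negative so the largest overlap sorts first
    scored.sort()  # ties fall back to alphabetical URL order
    return [url for _, url in scored[:k]]


# Hypothetical pillar pages: URL -> title
pillars = {
    "/blog/best-standing-desks-home-office": "Best standing desks for home offices",
    "/blog/lumbar-support-explained": "Lumbar support explained",
    "/blog/email-marketing-basics": "Email marketing basics",
}
draft = ("A guide to ergonomic office chairs for remote workers: adjustable "
         "lumbar support, seat height and a home office setup.")
print(rank_link_candidates(draft, pillars))
```

Exact-word overlap misses variants ("offices" vs. "office" here), which is exactly the kind of connection the LLM is better at spotting, so treat this as a pre-filter rather than the final list.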

Need a deeper dive on clustering? Check out our step‑by‑step guide on automating SEO content creation for a full workflow.

6. Validate with your SEO toolkit

Run the draft through your favourite SEO platform (Moz, Ahrefs, etc.) to catch missing LSI terms, thin content warnings, or keyword cannibalisation. Because the LLM can miss niche entities, a quick tool‑based audit adds that safety net.

For example, a recent test on a tech blog showed the LLM missed “PCI‑DSS compliance” – the audit flagged it, we added a short paragraph, and rankings for a related query jumped 18 % in two weeks.

7. Publish, monitor, and iterate

Push the final piece live, then set up a 7‑day performance check: track organic impressions, CTR, bounce rate, and average time‑on‑page. If the bounce rate spikes, revisit the prompt and ask the model to add more engaging sub‑headings or a concise summary.
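The seven-day check can be a small script rather than a manual spreadsheet pass. This is a minimal sketch, assuming you pull the weekly numbers yourself from Search Console and your analytics tool; the `WeeklyMetrics` fields, the 10-point bounce-rate threshold, and the suggested fixes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WeeklyMetrics:
    impressions: int
    clicks: int
    bounce_rate: float       # share of sessions that bounced, 0.0 to 1.0
    avg_time_on_page: float  # seconds

    @property
    def ctr(self) -> float:
        """Click-through rate; 0.0 when there were no impressions."""
        return self.clicks / self.impressions if self.impressions else 0.0


def review_flags(baseline: WeeklyMetrics, current: WeeklyMetrics,
                 bounce_jump: float = 0.10) -> list[str]:
    """Compare this week with a baseline week and list what looks worth revisiting."""
    flags = []
    if current.bounce_rate - baseline.bounce_rate >= bounce_jump:
        flags.append("Bounce rate spiked: ask the model for clearer sub-headings or a summary.")
    if current.ctr < baseline.ctr:
        flags.append("CTR fell: test a new title tag or meta description.")
    if current.avg_time_on_page < baseline.avg_time_on_page:
        flags.append("Time on page dropped: check that the intro answers the query quickly.")
    return flags


# Hypothetical numbers for a baseline week and the current week
baseline = WeeklyMetrics(impressions=3000, clicks=90, bounce_rate=0.45, avg_time_on_page=95.0)
current = WeeklyMetrics(impressions=3400, clicks=85, bounce_rate=0.58, avg_time_on_page=80.0)
for flag in review_flags(baseline, current):
    print(flag)
```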

Remember, LLM SEO is a loop. Each data point you collect refines the next prompt, making the whole system smarter over time.

So, what’s the first piece you’ll feed to your LLM today?

Choosing the Right LLM Tools for SEO

So you’ve got the basics of LLM SEO down and you’re wondering which tool actually moves the needle.

Do you need a laser‑focused brief builder, a real‑time optimizer, or an all‑in‑one workflow that takes you from keyword to publish?

Here’s how I break it down for the kind of teams we see at Rebelgrowth – digital marketing managers juggling multiple product lines, solo bloggers craving speed, and e‑commerce owners who can’t waste time on trial‑and‑error.

What to evaluate first

1. Core purpose. Some platforms are built for content creation (think Jasper‑style), others shine at on‑page grading (Surfer, Clearscope), and a few combine research, writing, and publishing in one pane (Scalenut).

2. Data source. Tools that pull directly from SERP data or integrate with Ahrefs/SEMrush tend to surface the entities LLMs love, while pure‑LLM writers may miss niche terms.

3. Pricing vs. volume. A $20/month keyword clusterer can be perfect for a solo creator, but an agency handling dozens of clients will need a scalable suite, even if it means a higher bill.

Does that sound like the kind of decision‑matrix you’ve been wrestling with?

Quick comparison

| Tool | Core Strength | Best For |
|---|---|---|
| Surfer SEO | Real‑time on‑page grading with NLP entity analysis | Teams that need instant feedback while drafting |
| WriterZen | AI‑powered keyword clustering and semantic mapping | Marketers focused on topical authority and cluster planning |
| Scalenut | End‑to‑end “Cruise Mode” from research to publishing | Businesses that want a single platform to scale content production |

Notice how each tool solves a different piece of the puzzle? If you’re already comfortable writing drafts, Surfer can act as a quality‑control layer. If your bottleneck is research, WriterZen gives you the clusters you need to feed an LLM. And if you’re hunting for a no‑switch workflow, Scalenut bundles it all.

We’ve seen a mid‑size SaaS firm start with WriterZen to map out a 30‑topic cluster, then hand those outlines to a content team using Surfer for optimization. The result? Their AI‑generated articles began showing up in ChatGPT citations 2‑3 weeks faster than before.

And for e‑commerce shops that need product‑page scale, Scalenut’s Cruise Mode can spin a 1,500‑word draft, embed internal‑link suggestions, and push it straight to WordPress – all while keeping the semantic richness LLMs look for.

Still not sure which one fits?

Tip: grab the free trial of two tools that align with your biggest pain point, run the same brief through both, and compare their LLM‑citation scores (some platforms show a “citation‑ready” rating). The one that consistently hits the green light is your next stack piece.

For a deeper dive into the feature sets and pricing tiers, check out the comprehensive guide on Superframeworks’ roundup of the best LLM SEO tools. It breaks down exactly what we’ve summarized here and adds a few niche options you might not have heard of.

If you prefer a market‑wide audit perspective, the DemandSage LLM SEO tools overview gives a quick snapshot of pricing and core capabilities across the board.

Bottom line: pick the tool that plugs the gap in your current workflow, test it with a real piece of content, and let the LLM citation data tell you if you’ve made the right call.

Measuring Success: LLM SEO Metrics & KPIs

When you finally get an LLM‑generated article live, the excitement fades fast if you can't prove it moved the needle. That's why you need a dashboard that tracks both traditional SEO performance and LLM visibility.

Two‑layer reporting you can't ignore

Traditional SEO still cares about rankings, impressions, clicks and conversions. In an AI‑first world you add a second layer: citations, AI mentions, recall scores and semantic dominance. Think of it as a 12‑point scorecard that Ranktracker calls the “Unified Visibility Index.”

In our experience, teams that track only the first layer miss up to 40 % of the traffic that actually comes from ChatGPT or Gemini answers.

Core LLM‑focused KPIs

1. AI Citation Count – how many times a direct URL from your site appears in a generative answer. A single citation can drive dozens of impressions without a SERP ranking.

2. Implicit Mention Volume – brand or product names that show up in AI answers without a link. This signals trust but also a missed linking opportunity.

3. Recall Score – the percentage of prompt variations that return your content. A 70 % recall means the model “remembers” you across different phrasings.

4. Entity Stability – how consistently the model associates your primary entities (e.g., “ergonomic chair”) with your pages over time. Sudden drift often precedes a ranking drop.
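Once you've logged which URLs each AI answer cited (by hand or with a tracking tool), citation count and recall are simple tallies. The sketch assumes a dictionary mapping each prompt variation to the cited URLs; the domain and answers are made up, and recall follows the definition above: the share of prompt variations that return your content.

```python
from urllib.parse import urlparse


def citation_stats(answers: dict[str, list[str]], domain: str) -> tuple[int, float]:
    """Return (citation count, recall %) for `domain`.

    `answers` maps each prompt variation to the URLs cited in the AI answer.
    Recall is the share of prompt variations whose answer cites the domain at least once.
    """
    def is_ours(url: str) -> bool:
        host = urlparse(url).hostname or ""
        return host == domain or host.endswith("." + domain)  # include subdomains like www.

    citation_count = sum(is_ours(url) for urls in answers.values() for url in urls)
    prompts_citing_us = sum(any(is_ours(url) for url in urls) for urls in answers.values())
    recall = 100 * prompts_citing_us / len(answers) if answers else 0.0
    return citation_count, recall


# Hypothetical log: prompt variation -> URLs cited in the AI answer
answers = {
    "best ergonomic chair for back pain": ["https://www.example-chairs.com/guide", "https://other.com/a"],
    "ergonomic chair weight limit": ["https://example-chairs.com/faq"],
    "how to choose an office chair": ["https://other.com/b"],
    "lumbar support chair vs cushion": ["https://www.example-chairs.com/guide",
                                        "https://www.example-chairs.com/lumbar"],
}
print(citation_stats(answers, "example-chairs.com"))
```

Here three of the four prompt variations cite the site, so recall is 75% with four citations in total.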

Real‑world example

A mid‑size e‑commerce brand selling ergonomic office chairs ran a weekly audit with Ranktracker. They saw AI citations climb from 2 to 12 in six weeks after adding a structured FAQ schema. Their organic traffic rose 18 %, and the average session duration jumped 22 seconds because users landed directly on the FAQ page from a ChatGPT answer.

Actionable 5‑step checklist

1. Set up a baseline – pull rankings, traffic, and current AI citation data. Save it in a spreadsheet.

2. Tag AI‑ready pages – add FAQ schema, JSON‑LD for “How‑To” content, and clear H1/H2 hierarchy. This makes it easier for LLMs to pull exact snippets.

3. Monitor citations weekly – use Ranktracker’s “AI Overview” report or a similar tool to log new URLs that appear in ChatGPT, Perplexity or Gemini answers.

4. Calculate a weekly health score – weight rankings (40 %), traffic (30 %), AI citations (15 %), recall (10 %) and entity stability (5 %). Aim for a score above 75.

5. Iterate prompts – if recall dips, feed the missing queries back into your LLM prompt library, rewrite the relevant sections, and re‑publish.
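The weighting in step 4 works out to a simple weighted average. The checklist doesn't say how to put rankings, traffic, and citations on a common scale, so this sketch assumes you've already normalized each metric to a 0 to 100 sub-score; the sub-score values in the example are hypothetical.

```python
# Weights from the checklist; they sum to 1.0
WEIGHTS = {
    "rankings": 0.40,
    "traffic": 0.30,
    "ai_citations": 0.15,
    "recall": 0.10,
    "entity_stability": 0.05,
}


def health_score(subscores: dict[str, float]) -> float:
    """Weighted average of 0-100 sub-scores, so the result is also on a 0-100 scale."""
    missing = WEIGHTS.keys() - subscores.keys()
    if missing:
        raise ValueError(f"missing sub-scores: {sorted(missing)}")
    return sum(weight * subscores[name] for name, weight in WEIGHTS.items())


score = health_score({
    "rankings": 80,
    "traffic": 70,
    "ai_citations": 60,
    "recall": 75,
    "entity_stability": 90,
})
print(f"Health score: {score:.1f}")
```

With these example sub-scores the page lands at 74, just under the 75 target, and the weights make it clear that rankings and traffic move the total far more than entity stability does.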

Tip: compare the health score of two competing product pages. The one with higher AI citation density usually outperforms in both SERP and generative search.

And if you need a quick reference on how to choose the right keyword research tool to feed those prompts, check out our Long Tail Pro vs Ahrefs comparison. It walks you through the data sources that LLMs love.

Finally, remember that the numbers are only useful if you act on them. Set a recurring 30‑day review, adjust your content prompts, and watch the unified score climb. When both the SEO and LLM layers move together, you’ve truly mastered LLM SEO.

Common Pitfalls & How to Avoid Them

Ever started an LLM‑SEO project and felt the excitement fizzle out because the traffic just didn’t show up? You’re not alone – most teams hit a few avoidable snags before they get the hang of it.

1. Over‑optimising for keywords, not intent

It’s tempting to cram every exact‑match phrase you’ve mined into the draft. The model then spits out a robotic paragraph that looks good on paper but confuses both users and the LLM that’s trying to cite you. In one case, an e‑commerce brand filled a product page with “ergonomic chair” 30 times. The page ranked in SERPs but never appeared in AI‑generated answers because the content lacked natural question‑answer pairs.

How to fix it: start with a list of real‑world questions from support tickets or community forums, then let the LLM answer those in a conversational tone. Keep keyword density below 2 % and focus on semantic relevance.
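To check the 2 % ceiling before publishing, you can compute density directly. Definitions vary between tools; this sketch uses one common one (keyword occurrences times words in the keyword, divided by total words), and the sample text is made up.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of words in `text` taken up by occurrences of `keyword`.

    Uses one common definition: occurrences * words_in_keyword / total_words * 100.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the keyword's words appear consecutively
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100 * hits * n / len(words)


text = ("An ergonomic chair supports your back. Choose an ergonomic chair with "
        "adjustable lumbar support and a seat that fits your desk.")
print(f"{keyword_density(text, 'ergonomic chair'):.1f}%")
```

The short sample comes out around 19%, far over the ceiling; the same two mentions in a 1,200-word article would be roughly 0.3%.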

2. Ignoring structured data

LLMs pull from schema‑rich pages more often. A tech startup we helped omitted FAQ schema on a how‑to guide; the model never cited the page, even though the content was solid. After adding FAQPage markup, citations jumped from zero to six within a week.

Action step: run a quick schema audit (Google’s Rich Results Test works fine) and add FAQ, HowTo or Article types where they make sense.
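If your CMS doesn't generate FAQ markup for you, the JSON-LD is easy to build. This is a minimal Python sketch following the schema.org FAQPage structure (a `mainEntity` list of `Question` items, each with an `acceptedAnswer`); the helper names and the sample Q&A are illustrative, and you should still validate the output with the Rich Results Test.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the <script> element that goes into the page's HTML."""
    # Escape "</" so text like "</script>" inside an answer can't close the tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'


print(script_tag(faq_jsonld([
    ("What is the weight limit for an ergonomic chair?",
     "It varies by model, so check the rating listed on the product page."),
])))
```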

3. Forgetting to refresh stale content

AI search tools increasingly favour fresh sources. Out‑of‑date statistics or old product specs make your page a less trustworthy reference, and the model may skip it altogether. One SaaS client saw a 12 % dip in AI citations after a major feature rollout because their blog still listed the previous pricing tiers.

Solution: schedule a monthly audit. Update any time‑sensitive data and republish with a clear “last updated” note, which gives both readers and AI tools a visible freshness signal.
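A monthly audit starts with knowing which pages are overdue. This sketch assumes you can export each URL's last-updated date (from your CMS or your sitemap's `lastmod` values); the URLs and dates are hypothetical, and `max_age_days` defaults to 30 to match a monthly cadence.

```python
from datetime import date, timedelta


def stale_pages(last_updated: dict[str, date], today: date, max_age_days: int = 30) -> list[str]:
    """Return URLs last updated more than max_age_days before today, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    overdue = [(updated, url) for url, updated in last_updated.items() if updated < cutoff]
    return [url for _, url in sorted(overdue)]


# Hypothetical export: URL -> last updated date
pages = {
    "/blog/ergonomic-chair-guide": date(2025, 1, 10),
    "/blog/pricing-tiers": date(2024, 11, 2),
    "/blog/standing-desks": date(2025, 2, 20),
}
print(stale_pages(pages, today=date(2025, 3, 1)))
```

Sorting oldest first puts the pages most likely to carry outdated prices or specs, like the pricing-tier post in the SaaS example, at the top of the review queue.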

4. Relying solely on AI‑generated drafts

Even the best LLM can hallucinate. A health‑blog post we reviewed contained a fabricated study statistic. Google’s Helpful Content update penalised the page, and the LLM stopped citing it.

Best practice: always have a subject‑matter expert verify facts before publishing. A quick checklist – source verification, citation formatting, and tone alignment – cuts the risk dramatically.

5. Neglecting internal linking clusters

LLMs love a well‑connected knowledge graph. If you publish a pillar but never link back to it, the model treats it like an orphan. A B2B company launched a 2,000‑word guide on “AI‑driven lead scoring” but only linked to a generic blog roll. After we added three contextual internal links to related case studies, AI citation frequency rose by 45 %.

Tip: use an AI‑assisted internal‑linking tool or simply map out a cluster in a spreadsheet before you publish.

For a quick reference on picking the right AI‑SEO platform to streamline these steps, check out 5 Best Ai Seo Software in 2025 - Rebelgrowth. It breaks down features that help you automate schema, audit freshness, and build link clusters.

Finally, consider turning your video assets into bite‑size text that the LLM can reference. Tools like YouTube Video Summarizer can pull key points from tutorials, giving you fresh, citation‑ready snippets without rewriting from scratch.

By spotting these common pitfalls early and applying the fixes above, you’ll keep your LLM‑SEO engine humming and avoid the dreaded “invisible content” trap.

FAQ

What is LLM SEO and how does it differ from traditional SEO?

LLM SEO is the practice of feeding large language models with your keyword research, topic outlines and brand guidelines so they can draft content that both humans and search engines love. Unlike traditional SEO, which relies on manual copywriting and separate tools for keyword placement, LLM SEO lets the model understand intent, suggest related entities and even propose internal links in a single pass. The result is faster production without sacrificing semantic depth.

Can I trust an LLM to produce accurate, up‑to‑date information?

LLMs are only as good as the data they’ve seen, so you should always verify facts before hitting publish. In our experience, a quick sanity check—cross‑checking dates, figures and brand‑specific claims against trusted sources—catches most hallucinations. Adding a “last updated” timestamp signals freshness to both users and the model, while structured snippets like FAQ schema give the LLM a clear reference point. Treat the AI draft as a first draft, not the final word.

How do I integrate LLM‑generated content into my existing content workflow?

Blend LLM output into your editorial calendar just like you would any guest post. Start by assigning the AI a specific keyword cluster, then have a copy editor run the draft through your brand‑voice checklist and a fact‑checking sheet. Once the content passes, slot it into your CMS, add the appropriate meta tags, and insert the suggested internal links pointing back to your pillar pages. The key is to keep a human gatekeeper at the end of the pipeline.

What are the best practices for prompting an LLM to create SEO‑friendly drafts?

The secret sauce is a prompt that balances detail with flexibility. Begin with who you’re writing for, the core intent, and at least three target entities—think brand names, product features, or industry terms. Ask the model to output a draft with headings, a FAQ block and a call‑to‑action, then request a separate list of internal‑link ideas. Finally, tell it to keep keyword density under two percent and to use natural variations; this steers the AI toward SEO‑friendly yet readable copy.

How important is schema markup and structured data for LLM SEO?

Schema isn’t optional for LLM SEO; it’s the breadcrumb that tells the model where your content lives in the knowledge graph. Adding FAQPage or HowTo markup turns a plain paragraph into a machine‑readable answer block, increasing the chance that a generative AI will pull your text verbatim. Even a simple Article schema gives the LLM context about author, publish date and main entity, which can boost both traditional rankings and AI citations.

How often should I refresh LLM‑generated pages to keep them fresh?

Freshness is a ranking signal for both classic SERPs and AI‑driven answers. A good rule of thumb is to audit LLM‑generated pages every 30‑45 days, especially if they reference stats, product prices or software versions. During the review, update any dated figures, add new FAQs that reflect recent user queries, and republish with a clear ‘last updated’ note. This small habit keeps what AI tools surface about you aligned with your current offering and helps maintain citation momentum.

What common mistakes cause LLM SEO to underperform, and how can I avoid them?

The most common reason LLM SEO stalls is treating the AI draft as a finished product. Over‑optimising keywords, skipping schema, and neglecting internal link clusters all drain the model’s citation power. To avoid this, run a checklist: 1) keep keyword density under 2 %, 2) add relevant FAQ or HowTo schema, 3) map at least two contextual internal links, and 4) have a subject‑matter expert verify every factual claim. When you close those gaps, your LLM‑assisted content usually starts earning AI citations and organic traffic together.

Conclusion

We've taken a quick tour of how LLM SEO can turn a tedious content grind into a lean, data‑driven engine.

Remember that moment when you stared at a blank page, wondering how to hit both human readers and the new AI‑first search layer? That's the exact friction LLM SEO smooths out.

In practice, the biggest wins come from three habits: keep your prompts focused on real user questions, sprinkle in schema so the model can lift your answers, and treat every draft as a living document you refresh every month.

So, what should you do next? Grab one pillar page, run it through your LLM with a clear intent brief, add FAQ and HowTo markup, then set a calendar reminder to revisit it in 30‑45 days.

When you pair that routine with an automated backlink engine, the traffic starts to compound—just like we’ve seen with dozens of small‑to‑mid‑size businesses that switched from manual copy to LLM‑augmented publishing.

And if you’re still on the fence, ask yourself: can you afford to ignore the AI citation signal that is already reshaping SERPs?

Take the first step today, experiment on a low‑stakes article, and let the data tell you whether LLM SEO is the missing piece in your growth puzzle.